Hello Dimitry,

yes, the UI became very slow after a syste reboot.

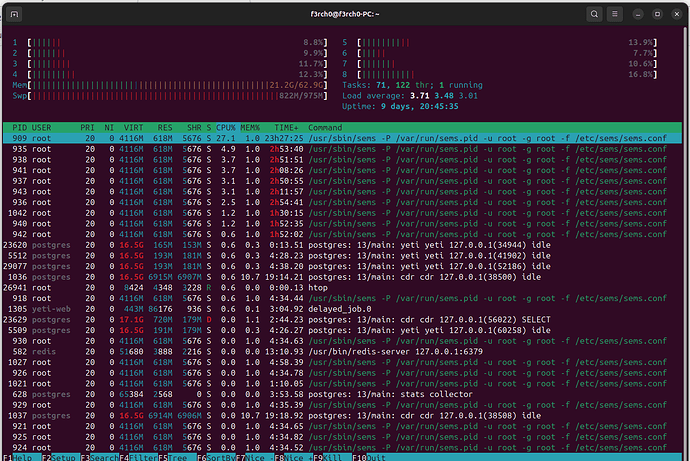

I am posting an htop capture:

I searched for errors in yeti-web.log and it has no errors. As an example, today, the web login, lasted a long time to authenticate…a couple of minutes…and when it happened the yeti-web log showed this:

Apr 25 09:52:08 pochi yeti-web[25761]: [38e66269-3a0d-4858-a33e-afced677bc42] Rendered charts/_nodes.html.erb (0.9ms)

Apr 25 09:52:08 pochi yeti-web[25761]: [38e66269-3a0d-4858-a33e-afced677bc42] Rendered charts/_profit.html.erb (0.3ms)

Apr 25 09:52:08 pochi yeti-web[25761]: [38e66269-3a0d-4858-a33e-afced677bc42] Rendered charts/_duration.html.erb (0.2ms)

Apr 25 09:52:08 pochi yeti-web[25761]: [38e66269-3a0d-4858-a33e-afced677bc42] Rendered active_admin/_footer.html.arb (83.2ms)

Apr 25 09:52:08 pochi yeti-web[25761]: [38e66269-3a0d-4858-a33e-afced677bc42] Rendered vendor/bundle/ruby/2.6.0/gems/activeadmin-2.7.0/app/views/active_admin/page/index.html.arb (507171.2ms)

Apr 25 09:52:08 pochi yeti-web[25761]: [38e66269-3a0d-4858-a33e-afced677bc42] Completed 200 OK in 521954ms (Views: 505691.8ms | ActiveRecord: 1947.6ms)

Apr 25 09:52:08 pochi yeti-web[25761]: [3c6b617c-e349-4346-87b3-cff8a5723354] Started GET “/remote_stats/nodes.json” for 200.10.117.67 at 2025-04-25 09:52:08 -0300

Apr 25 09:52:09 pochi yeti-web[25761]: [3c6b617c-e349-4346-87b3-cff8a5723354] Processing by RemoteStatsController#nodes as JSON

Apr 25 09:52:15 pochi yeti-web[25761]: [3c6b617c-e349-4346-87b3-cff8a5723354] Completed 200 OK in 6982ms (Views: 0.3ms | ActiveRecord: 2.5ms)

no errors!

I also searched for errors the postgresql log and only found theese:

2025-04-23 07:35:59.259 -03 [2184] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 07:38:02.589 -03 [11311] yeti@yeti ERROR: canceling statement due to user request

2025-04-23 07:39:06.148 -03 [11309] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 07:39:33.207 -03 [11306] yeti@yeti ERROR: canceling statement due to user request

2025-04-23 07:43:59.198 -03 [19412] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 07:50:51.589 -03 [19428] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 07:59:00.403 -03 [19446] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 08:03:27.145 -03 [19465] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 08:09:07.022 -03 [19492] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 08:10:10.352 -03 [19511] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:53:13.945 -03 [19529] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:53:13.945 -03 [19531] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:53:30.534 -03 [29046] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:53:40.885 -03 [29052] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:53:40.885 -03 [29050] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:53:52.658 -03 [29057] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:54:10.319 -03 [19536] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:54:18.877 -03 [29060] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:54:35.652 -03 [19533] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-23 19:54:49.951 -03 [29066] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-24 06:47:45.219 -03 [29079] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-24 06:49:49.446 -03 [29081] yeti@yeti ERROR: canceling statement due to user request

2025-04-24 06:52:59.740 -03 [5487] yeti@yeti ERROR: canceling statement due to statement timeout

2025-04-24 18:34:24.974 -03 [14899] ERROR: canceling autovacuum task

2025-04-25 05:34:08.795 -03 [29083] yeti@yeti ERROR: canceling statement due to statement timeout

I do not know if that could tell us something about the slowness we are experiencing on UI.

Where I also found errors is in sems-main.log:

Apr 25 08:05:02 pochi sems[909]: [936/core/AmMediaTransport.cpp:935] ERROR: [0x7f5c68117a20] sendto(244,0x7f5c98e112a0,172,0,0x7f5c68019820,16): errno: 11, raddr:‘192.168.2.231’, type: 0

Apr 25 08:05:03 pochi sems[909]: [936/core/AmRtpConnection.cpp:102] ERROR: [0x7f5c680197e0] AmStreamConnection::send: ret: -1. r_addr:‘192.168.2.231’:20510, r_host:‘192.168.2.231’, r_port: 20510

Apr 25 08:05:03 pochi sems[909]: [936/core/AmRtpStream.cpp:1372] ERROR: [0x7f5c680d6580] while sending RTP packet.

Apr 25 08:05:47 pochi sems[909]: [917/core/AmBasicSipDialog.cpp:601] ERROR: could not find any transaction matching reply: [code:408;phrase:[Timeout];i:1-66DA3815-680B6C2C0007187B-9A285700;cseq:10;from-tag:1-62EF608F-680B6C2C0007187A-9A285700;]

Apr 25 08:05:48 pochi sems[909]: [919/core/AmBasicSipDialog.cpp:601] ERROR: could not find any transaction matching reply: [code:408;phrase:[Timeout];i:1-6D027D72-680B6C2C00074EF9-9A083700;cseq:10;from-tag:1-541FC202-680B6C2C00074EF8-9A083700;]

Apr 25 08:05:48 pochi sems[909]: [913/core/AmBasicSipDialog.cpp:601] ERROR: could not find any transaction matching reply: [code:408;phrase:[Timeout];i:1-603A2ED0-680B6C2F00074665-99275700;cseq:10;from-tag:1-42CFBEA5-680B6C2F00074664-99275700;]

Apr 25 08:06:04 pochi sems[909]: [935/core/AmMediaTransport.cpp:935] ERROR: [0x7f5c502ca3d0] sendto(165,0x7f5c98f3f2a0,172,0,0x7f5c50055d70,16): errno: 11, raddr:‘192.168.2.120’, type: 0

Apr 25 08:06:04 pochi sems[909]: [935/core/AmRtpConnection.cpp:102] ERROR: [0x7f5c50055d30] AmStreamConnection::send: ret: -1. r_addr:‘192.168.2.120’:33642, r_host:‘192.168.2.120’, r_port: 33642

Apr 25 08:06:04 pochi sems[909]: [935/core/AmRtpStream.cpp:1372] ERROR: [0x7f5c50452c70] while sending RTP packet.

Apr 25 09:11:27 pochi sems[909]: [928/core/sip/trans_layer.cpp:1611] ERROR: Trying to cancel a request while in TERMINATED_200 state; inv_cseq: 10, i:1-1D32FBDD-680B7BCC000B1E82-9977A700

Apr 25 09:27:28 pochi sems[909]: [916/core/AmB2BSession.cpp:517] ERROR: BUG: media session is missing, can’t update local SDP

Apr 25 09:31:12 pochi sems[909]: [932/core/sip/trans_layer.cpp:1599] ERROR: Trying to cancel a request while in TS_COMPLETED state; inv_cseq: 10, i:1-413503DC-680B80670007B808-99376700

Apr 25 09:35:58 pochi sems[909]: [922/core/sip/trans_layer.cpp:1611] ERROR: Trying to cancel a request while in TERMINATED_200 state; inv_cseq: 10, i:1-5FE0D6FD-680B8189000CE07E-99D80700

Apr 25 09:42:25 pochi sems[909]: [916/core/AmSipDialog.cpp:803] ERROR: trying to ACK a non-existing transaction (cseq=10;local_tag=1-0BCA2269-680B830C00050405-9A386700)

Apr 25 09:42:25 pochi sems[909]: [916/core/AmB2BSession.cpp:976] ERROR: dlg->send_200_ack() failed, local_tag = 1-0BCA2269-680B830C00050405-9A386700

Apr 25 09:48:52 pochi sems[909]: [924/core/sip/trans_layer.cpp:1599] ERROR: Trying to cancel a request while in TS_COMPLETED state; inv_cseq: 10, i:1-28A69D45-680B84AE0001D96F-99B7E700

Apr 25 09:50:08 pochi sems[909]: [921/core/sip/trans_layer.cpp:1611] ERROR: Trying to cancel a request while in TERMINATED_200 state; inv_cseq: 10, i:1-2C3AE7E6-680B84EA000957C2-99E81700

Apr 25 09:50:17 pochi sems[909]: [922/core/sip/trans_layer.cpp:1599] ERROR: Trying to cancel a request while in TS_COMPLETED state; inv_cseq: 10, i:1-365D8F6C-680B84E1000CE75A-99D80700

Apr 25 09:50:28 pochi sems[909]: [928/yeti:resources/ResourceCache.cpp:533] ERROR: exception: can’t get connection from read redis pool 0

Apr 25 09:50:28 pochi sems[909]: [928/yeti:resources/ResourceControl.cpp:282] ERROR: cache error reject_on_error = 0

Apr 25 09:50:28 pochi sems[909]: [932/yeti:resources/ResourceCache.cpp:533] ERROR: exception: can’t get connection from read redis pool 0

Apr 25 09:50:28 pochi sems[909]: [932/yeti:resources/ResourceControl.cpp:282] ERROR: cache error reject_on_error = 0

Apr 25 09:50:28 pochi sems[909]: [924/yeti:resources/ResourceCache.cpp:533] ERROR: exception: can’t get connection from read redis pool 0

Apr 25 09:50:28 pochi sems[909]: [924/yeti:resources/ResourceControl.cpp:282] ERROR: cache error reject_on_error = 0

Apr 25 09:50:28 pochi sems[909]: [927/yeti:resources/ResourceCache.cpp:533] ERROR: exception: can’t get connection from read redis pool 0

Apr 25 09:50:28 pochi sems[909]: [927/yeti:resources/ResourceControl.cpp:282] ERROR: cache error reject_on_error = 0

Apr 25 10:04:28 pochi sems[909]: [1042/core/AmSessionContainer.cpp:374] ERROR: 482 Loop Detected

Hope all this could give us a clue where the bottleneck is…if there is one…

Thank you!

Fernando